In the last few weeks, the tech world has been abuzz with GPT3. There has been a Cambrian explosion of demos that look super cool. A16Z has a great, must-listen podcast that goes through the details.

How GPT3 works. A visual thread.

A trained language model generates text.

We can optionally pass it some text as input, which influences its output.

The output is generated from what the model "learned" during its training period where it scanned vast amounts of text.

1/n pic.twitter.com/imM66oyTIC

— Jay Alammar (@JayAlammar) July 21, 2020

You start by providing GPT3 a few example questions and answers that prime the model. After priming, you can ask it questions and it (mostly) predicts and generates the right answer. You could think about GPT3 as a super generalized inference model for text. You now have a generalized text-based interface that understands well what you are trying to ask or do!
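The priming step can be sketched as plain string assembly. This is a minimal illustration, not how any particular demo does it: the `Q:`/`A:` layout and the `build_prompt` name are assumptions, and the call to the model itself is left out.

```python
from typing import List, Tuple


def build_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Assemble a few-shot prompt: primed Q/A pairs, then the new question.

    The "Q:"/"A:" layout is an assumed convention for illustration only.
    """
    lines = []
    for example_question, example_answer in examples:
        lines.append(f"Q: {example_question}")
        lines.append(f"A: {example_answer}")
    # Leave the final answer empty so the model completes it.
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)


# Hypothetical priming examples; the resulting text would be sent to the model.
prompt = build_prompt(
    [("How many users signed up last week?",
      "SELECT COUNT(*) FROM users WHERE signup_date > now() - interval '7 days';")],
    "How many orders were placed today?",
)
print(prompt)
```

The model never sees separate "training" and "question" phases here; it just continues a single piece of text that happens to end with an unanswered question.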

I got GPT-3 to start writing my SQL queries for me

p.s. these work against my *actual* database! pic.twitter.com/6RoJewXEEx

— faraaz (@faraaz) July 22, 2020

GPT-3 is going to change the way you work.

Introducing Quick Response by OthersideAI

Automatically write emails in your personal style by simply writing the key points you want to get across

The days of spending hours a day emailing are over!!!

Beta access link in bio! pic.twitter.com/HFjZOgJvR8

— OthersideAI (@OthersideAI) July 22, 2020

This opens up a whole new world in interface design for products.

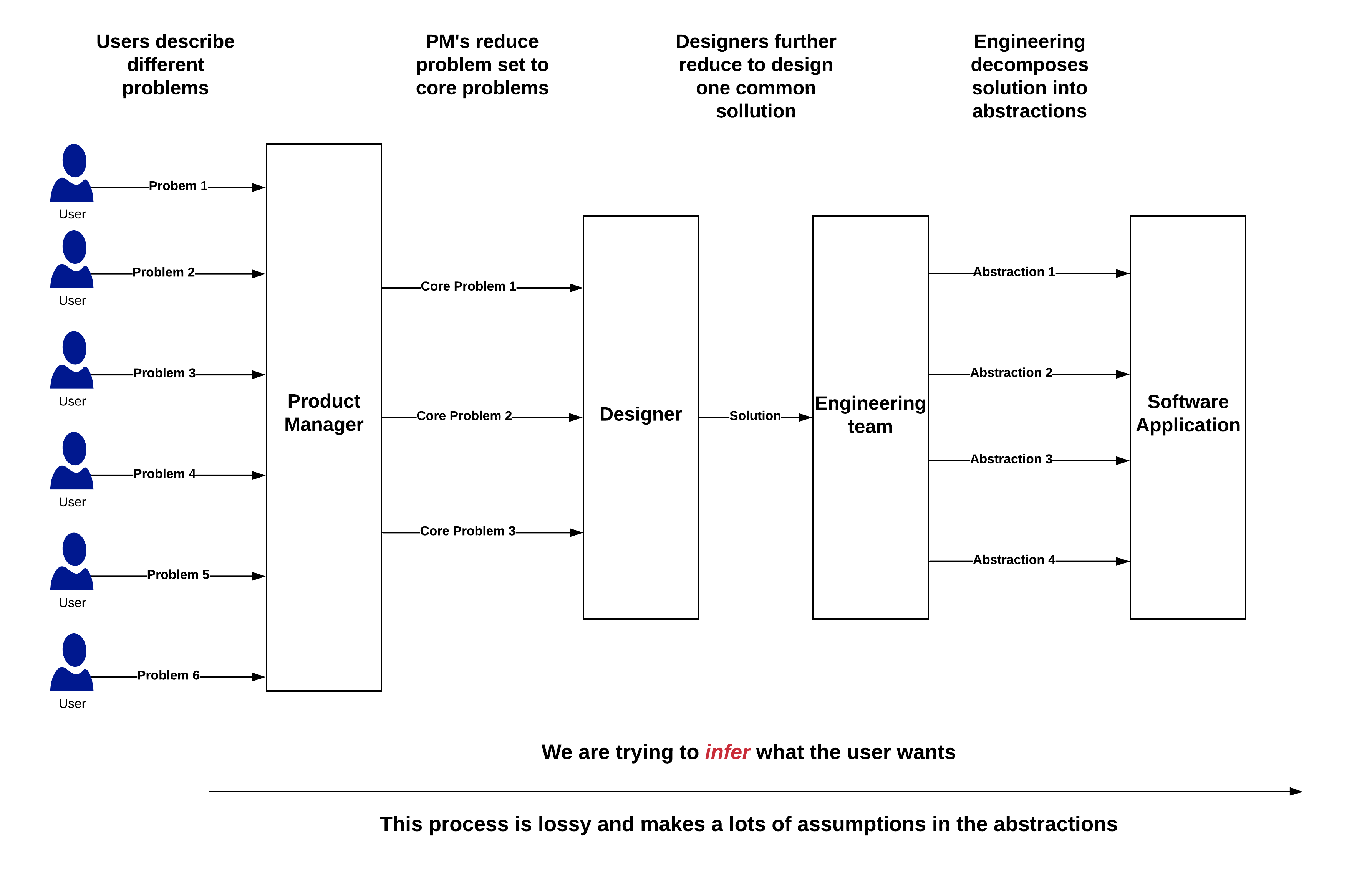

To dig deeper, let's first take a tour through how software is designed in the pre-GPT3 world. Software is a tool to accomplish the things a user wants to accomplish, a solution to a problem that users have. Software by itself is not the end; it is the means to the end. It's a tool.

So the first question to ask is – what problem does the user want to solve, what does the user want to do? Each user has a slightly different take on their version of the problem and the type of solution that works for them. The range of problems and the range of possible solutions is pretty large.

The art of product management and design is to distill those problems into a smaller set of tractable problems. What is the “real” problem to solve? What does the user want? This distilling process continues through the entire solution-building process. We think we have figured out the correct intent of the user, but the range of solutions can still be vast. So we apply a similar process, constrain the range of possible solutions, and settle on a few. We want to reduce choice for the user, as too many choices lead to a bad product experience. We want our solution to be intuitive: less is more.

I call this the reductionist approach.

At its core – we are inferring what the user wants and also inferring the solution to that want. This is the game in the reductionist approach.

I posit that this is a suboptimal process for designing products in general and an extremely bad model to use for fintech products. Fintech is about money, and money is the most personal thing on this planet. Every person has a unique perspective on how they think about money and the problem they are trying to solve with their finances. Everybody has a personal mental model for money. By using the reductionist approach, we squeeze a large range of possible problems and solutions into too narrow a band, which gives the user a suboptimal solution.

This reductionist approach also leads to engineering teams building the wrong set of abstractions in code. Since an inference/translation occurs at every step, the process is lossy and filled with assumptions. The resulting software abstractions are messy because the assumptions about user intent were incorrect.

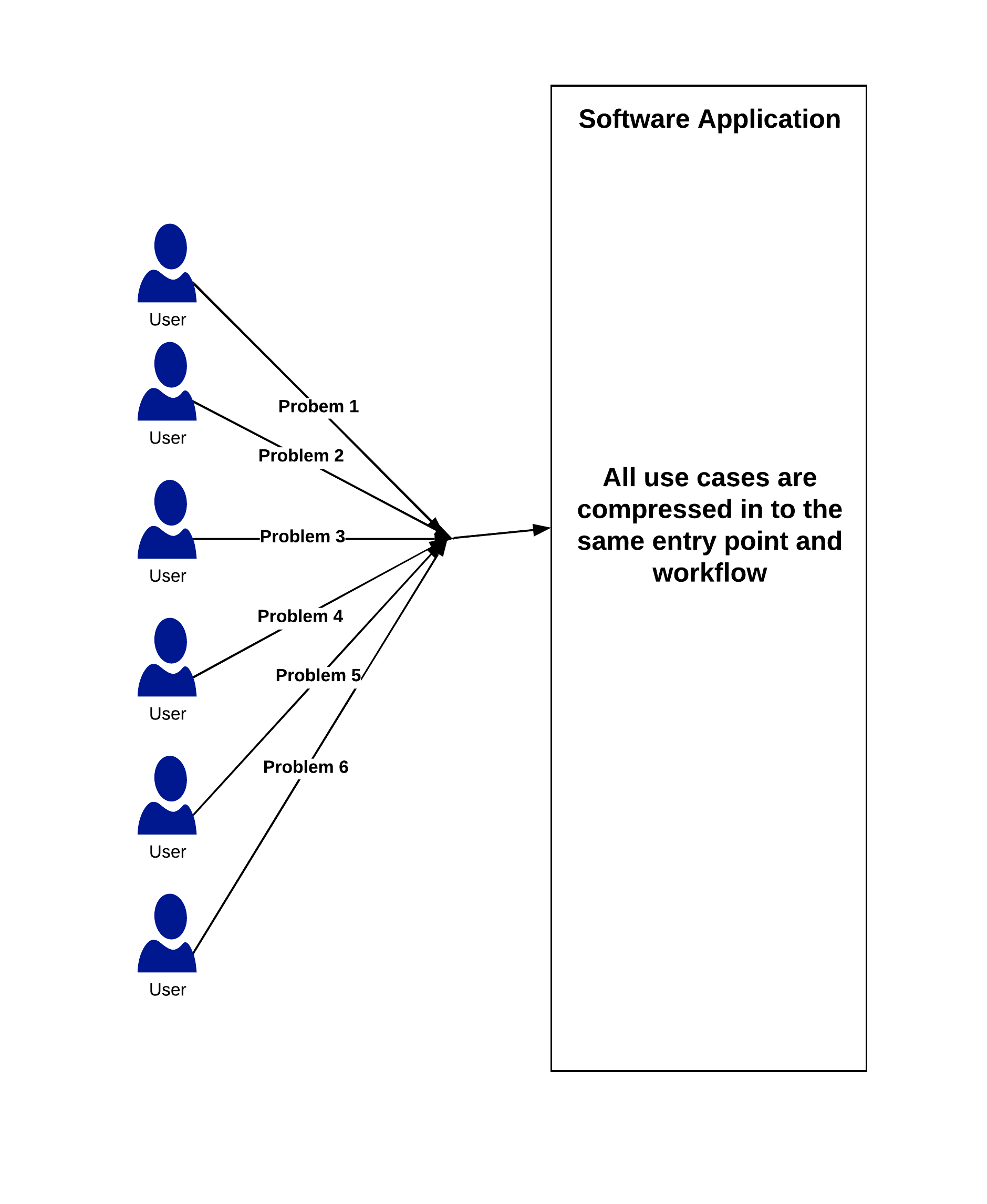

An example to make this concrete. The common pattern in every investment product is to show the current value of your portfolio. The inference here is that as a user the most important thing you care about is how your money is doing, so summary portfolio statistics are front and center. However, there are a lot of different types of investors and they all have different reasons to use the platform. For example, an active trader may want to check the status of their trades or place more trades. The right first screen for this persona shows the status of their trades and a super-fast trade entry. Because we assumed that portfolio statistics are the most important thing, the information the trader wants is two clicks/screens deep.

I understand why this pattern is the default pattern. Since a majority of users are going to be non-traders, let’s constrain the solution to serve the majority of users and make portfolio statistics the default view. It’s inference all the way down, inference driven by humans. Humans are trying to read other humans’ minds.

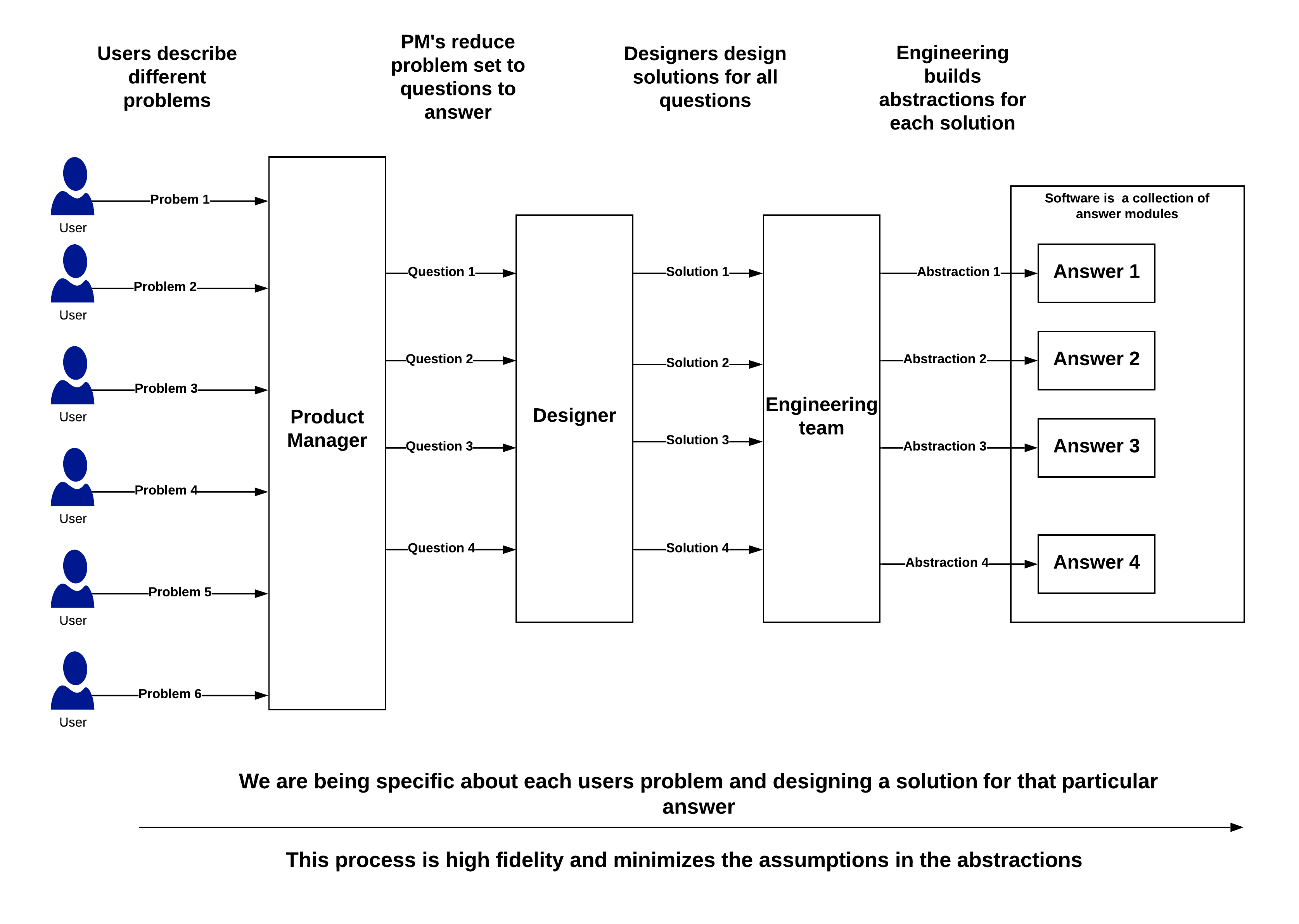

What if GPT3 is used for inference and we convert our thinking from a reductionist to what I call a maximalist approach?

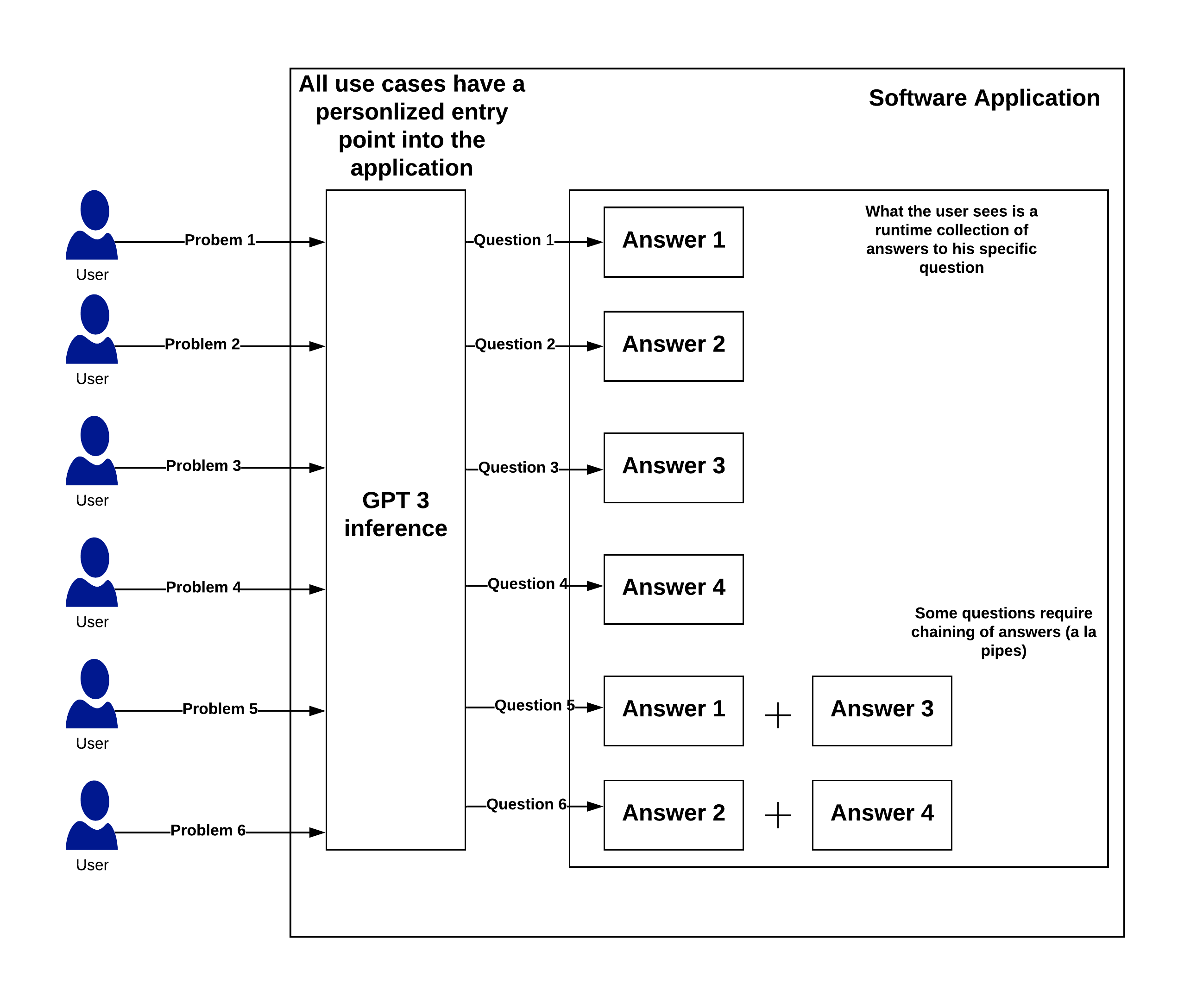

What if the entry point for software is not the home screen but just a text box in which the user can type what they want? Using GPT3 we can infer exactly what the user wants, because they just told us what they want! The product management process now changes into trying to figure out a priori what types of questions users are going to ask. There isn’t a need to reduce them down to a smaller set of questions. We design a solution for each use case. The product designer and the PM now become more like component-level thinkers. What are the key components of each thing that the user wants to do, and how can I design a solution for that one component?

In effect, the worlds of engineering abstractions and product design come closer together, and there are fewer assumptions. Product, design, and engineering are thinking and executing with the core tenet of the Unix philosophy of software design, i.e., at the most atomic level, your code should do one thing and do it well. To accomplish higher-level things, you chain the base-level components together to compose the solution. Product design can follow the same philosophy. You design a widget that does one thing and one thing only, and then chain those together as you infer what the user wants.
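To make the "one widget, one job, chained together" idea concrete, here is a minimal sketch. Everything in it is hypothetical: the widget names, the hard-coded holdings, and the `chain` helper are illustrative assumptions, not part of any real platform.

```python
from typing import Callable, Dict

# A widget takes a context dict and returns an enriched copy of it.
Widget = Callable[[Dict], Dict]


def fetch_positions(ctx: Dict) -> Dict:
    # Hypothetical holdings, hard-coded in place of a real data source.
    return {**ctx, "positions": {"TSLA": 10_000.0, "AAPL": 5_000.0}}


def total_value(ctx: Dict) -> Dict:
    # Does one thing: sums the position values.
    return {**ctx, "total": sum(ctx["positions"].values())}


def render_summary(ctx: Dict) -> Dict:
    # Does one thing: turns the total into display text.
    return {**ctx, "view": f"Portfolio value: ${ctx['total']:,.2f}"}


def chain(*widgets: Widget) -> Widget:
    """Compose widgets left to right, like a Unix pipeline."""
    def run(ctx: Dict) -> Dict:
        for widget in widgets:
            ctx = widget(ctx)
        return ctx
    return run


portfolio_stats = chain(fetch_positions, total_value, render_summary)
result = portfolio_stats({})
print(result["view"])
```

Each widget knows nothing about the others, so a new user request can be served by a new chain rather than a new screen.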

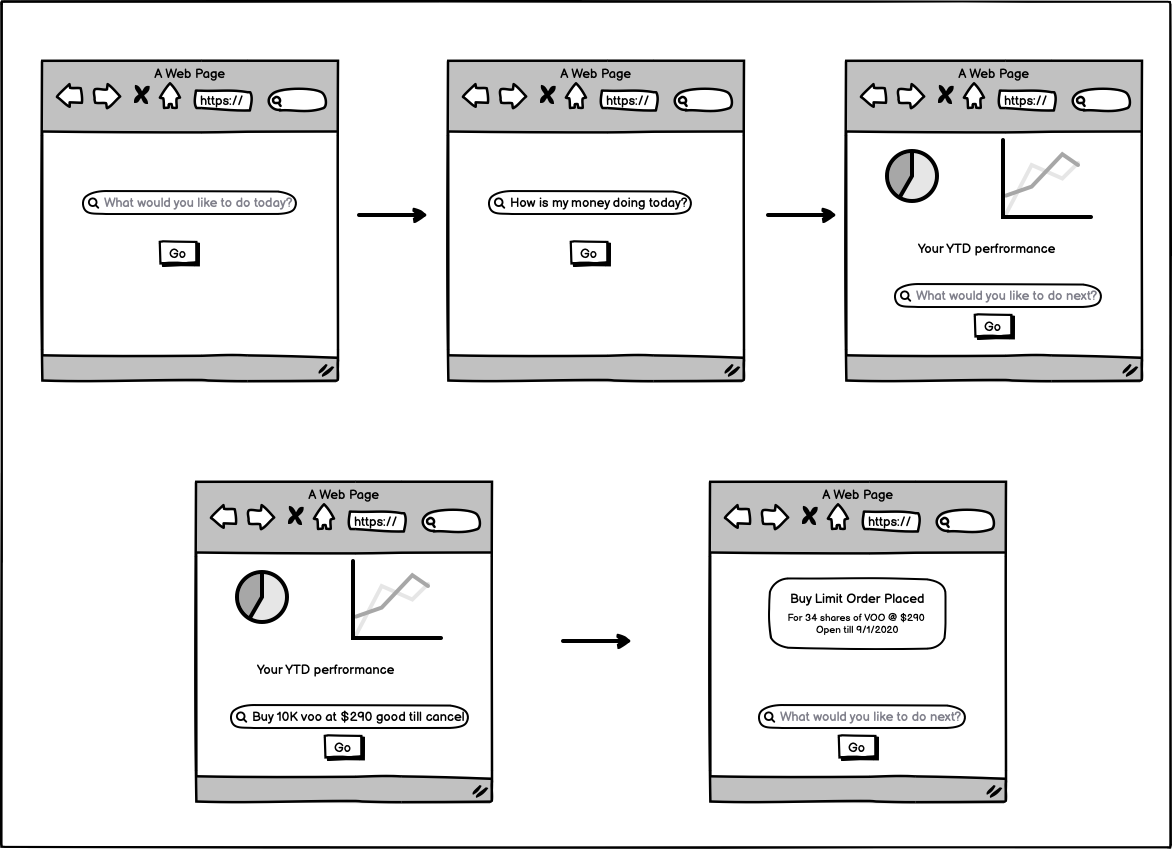

Imagine the home screen of your investment platform that just shows you a text box: “What do you want to do today?”

- You type in “I want to see how my money is doing” → using GPT3, you know the user wants to see portfolio statistics → show them their portfolio stats

- “I want to see my recent trades” → show the current trade status

- “I want to buy 10k worth of tesla at 1000$ a share” → place a buy limit order for 10 shares of TSLA at $1000

- “How is my money doing this year” → YTD return statistics

- “How will I fare in a recession” → run a portfolio backtest with prior recessions and present the results
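The mapping above can be sketched as an intent router. This is only an illustration under loud assumptions: `infer_intent` is a naive keyword matcher standing in for the language model, and the intent labels, handler strings, and `shares_for` helper are all hypothetical names.

```python
from typing import Callable, Dict


def infer_intent(text: str) -> str:
    """Placeholder for the model: naive keyword matching, NOT GPT3."""
    lowered = text.lower()
    if "recession" in lowered:
        return "backtest_recession"
    if "buy" in lowered:
        return "place_order"
    if "trades" in lowered:
        return "trade_status"
    if "this year" in lowered:
        return "ytd_return"
    if "money" in lowered:
        return "portfolio_stats"
    return "unknown"


# One handler per intent; each returns a description of the view to show.
HANDLERS: Dict[str, Callable[[], str]] = {
    "portfolio_stats": lambda: "show portfolio statistics",
    "trade_status": lambda: "show current trade status",
    "place_order": lambda: "open limit order ticket",
    "ytd_return": lambda: "show YTD return statistics",
    "backtest_recession": lambda: "run backtest over prior recessions",
}


def route(text: str) -> str:
    handler = HANDLERS.get(infer_intent(text))
    return handler() if handler else "ask the user to rephrase"


def shares_for(notional: float, limit_price: float) -> int:
    """Whole shares affordable at the limit price."""
    return int(notional // limit_price)


print(route("I want to see my recent trades"))
print(shares_for(10_000, 1_000))
```

Adding a feature in this model means registering one more handler, not redesigning the home screen.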

GPT3 makes this transformation from reductionist to maximalist possible. We can now provide a personalized experience to every user of our product.

The maximalist model has ancillary benefits as well. UI discoverability becomes a non-issue. In the reductionist model, a lot of time is spent figuring out where to introduce new features in the UI. Since there is only one entry point, there are a lot of conflicts and discussions about where new features get their entry point. Should we link off the home page? What’s the tradeoff? What are we replacing? We don’t have to worry about that anymore: a feature is shown when the user asks for it!

Maybe this is a long-winded way of saying that we are finally going to get the conversational interfaces we deserve! 🙂